“I don’t care if it works on your machine! We are not shipping your machine!”

— Vidiu Platon.

Introduction

When it comes to software testing in an IT environment, we can distinguish two kinds of testing: Manual Testing and Automated Testing. They are not always mutually exclusive; under certain circumstances, they can be used in parallel on the same system for different purposes.

The purpose of testing can range from “simple” bug finding to the assessment of user-friendliness, and it depends largely on the approach the stakeholders want to follow. A tester can be involved from the development phase onwards (Agile / DevOps methodologies), providing constant feedback on each new feature as it is implemented and influencing the whole software lifecycle from the beginning. Some development methodologies release small pieces of code so that a tester can verify them throughout the development process, identifying any problem before the final release.

Let’s now consider the two kinds of testing in more depth:

Manual Testing

In manual testing, the software is tested by hand by an individual tester. It can be performed to discover bugs during software development, but also to provide feedback on user-friendliness and to suggest how the application could be improved to make it both easier to use and more reliable.

Depending on the criticality and application area of the software, the tester follows a more or less strict approach to verifying every feature of the system. The tester can use either a risk-based approach (especially for systems/software used in a Life Science environment with GxP criticality) or a freer, exploratory methodology, which allows the tester to use their imagination to discover how the system could be improved, not only to find bugs.

Whatever approach is used, after executing the test cases the tester writes the test report without the help of any automated testing tool.

Automated Testing

In automated testing, a tester has to write code, in the form of test scripts, that a testing tool then executes.

Automated testing relies on coded test scripts in which the testing tool compares the expected result with the actual result (the outcome of the specific test step). The tool then generates a test report that flags each pass or failure at both step and script level (when several scripts are run), allowing the analyst to determine whether the application is performing as expected.
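The comparison and reporting described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the internals of any particular testing tool: the `Step`, `run_script` and `print_report` names, the report layout, and the `check_storage_temperature` function under test (with a deliberately planted boundary defect) are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Step:
    """One test step: what is checked, how to obtain the actual result, and what is expected."""
    description: str
    action: Callable[[], Any]
    expected: Any


def run_script(name: str, steps: List[Step]) -> Dict[str, Any]:
    """Run every step, compare actual vs expected, and return a step- and script-level report."""
    step_results = []
    for step in steps:
        actual = step.action()
        step_results.append({
            "step": step.description,
            "expected": step.expected,
            "actual": actual,
            "status": "PASS" if actual == step.expected else "FAIL",
        })
    # A script passes only if every one of its steps passes.
    script_status = "PASS" if all(r["status"] == "PASS" for r in step_results) else "FAIL"
    return {"script": name, "status": script_status, "steps": step_results}


def print_report(reports: List[Dict[str, Any]]) -> None:
    """Print one line per script, followed by one indented line per step."""
    for report in reports:
        print(f"{report['script']}: {report['status']}")
        for r in report["steps"]:
            print(f"  [{r['status']}] {r['step']} "
                  f"(expected={r['expected']!r}, actual={r['actual']!r})")


# Hypothetical system under test: storage must be between 2 and 8 degrees C inclusive.
def check_storage_temperature(celsius: float) -> str:
    # Planted defect for illustration: the upper bound is exclusive (< 8 instead of <= 8).
    return "OK" if 2 <= celsius < 8 else "OUT OF RANGE"


reports = [
    run_script("typical_values", [
        Step("5 degrees C is accepted", lambda: check_storage_temperature(5), "OK"),
        Step("12 degrees C is rejected", lambda: check_storage_temperature(12), "OUT OF RANGE"),
    ]),
    run_script("boundary_values", [
        Step("2 degrees C is accepted", lambda: check_storage_temperature(2), "OK"),
        Step("8 degrees C is accepted", lambda: check_storage_temperature(8), "OK"),
    ]),
]
print_report(reports)
```

In this sketch, `typical_values` is reported as PASS and `boundary_values` as FAIL, and the step-level line points straight at the 8 degree step, so the analyst knows exactly where to look without reviewing the whole run.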

While manual testing allows the tester to use their imagination and explore the application flexibly, an automated testing tool executes the scripts exactly as they are coded, running repetitive tasks without human intervention.

This is an advantage whenever the scripts can be executed in a static, repetitive manner over a certain period or throughout the whole software lifecycle. A typical case is regression testing, which is conducted periodically or whenever a new feature is implemented, when the product owner wants to ensure that the existing code still performs as expected after the update.
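As a rough illustration of regression testing, the Python sketch below re-runs a fixed set of cases, recorded against the released version, after an update. Everything in it is hypothetical: `net_price_v1` stands for the released code, `net_price_v2` for an updated version that adds a feature but also introduces an unintended side effect, and `REGRESSION_CASES` for the baseline behaviour the product owner wants to preserve.

```python
from typing import Callable, List, Tuple

# Hypothetical baseline recorded against the released version:
# (price, discount_percent) -> expected net price.
REGRESSION_CASES: List[Tuple[Tuple[float, float], float]] = [
    ((100.0, 10.0), 90.0),
    ((50.0, 0.0), 50.0),
    ((19.99, 15.0), 16.99),
]


def net_price_v1(price: float, discount_percent: float) -> float:
    """Released version: apply a percentage discount, rounded to cents."""
    return round(price * (1 - discount_percent / 100), 2)


def net_price_v2(price: float, discount_percent: float, loyal_customer: bool = True) -> float:
    """Updated version: new loyalty bonus of 5 extra percentage points.

    Unintended side effect: the default should have been False, so every
    existing caller now silently receives the bonus.
    """
    if loyal_customer:
        discount_percent += 5
    return round(price * (1 - discount_percent / 100), 2)


def run_regression(func: Callable[[float, float], float]) -> List[str]:
    """Re-run every baseline case and return a description of each regression found."""
    failures = []
    for (price, discount), expected in REGRESSION_CASES:
        actual = func(price, discount)
        if actual != expected:
            failures.append(f"{func.__name__}({price}, {discount}) = {actual}, expected {expected}")
    return failures


print(run_regression(net_price_v1))  # prints []: the released version matches its baseline
for failure in run_regression(net_price_v2):
    print(failure)
```

The new loyalty feature itself may well work as intended; what the suite catches is the change to existing behaviour, which is exactly what regression testing is for. Because the cases never change, the same script can be re-run after every update at essentially no extra cost.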

Automated testing still requires a considerable initial effort from an automation engineer, who has to code the scripts in the first place. The same effort re-emerges every time something changes in the software, because the pre-scripted tests must then be adapted.

Pros and Cons

Automated testing is significantly faster than a manual approach and, once the scripts are coded, requires no human resources to run; manual testing, on the contrary, is time-consuming and requires human resources for every execution. However, automation does not allow random or exploratory testing, and it is not useful when you need to understand how and where the software can be improved or how easy it is for an end user to use. The initial effort is also higher than for manual testing, because an automation engineer has to code the scripts, and every subsequent change has to be coded as well, whereas a manual tester can incorporate changes more easily. Despite that, in the long run and for redundant, repetitive tasks, the return on investment is higher and favours automated testing.

Automated testing is also more reliable, as it avoids the human errors that can occur during a manual run: it takes over repetitive tasks that could bore a manual tester and thereby increase the risk of mistakes.

Reading this, it may seem that automation has the edge after all. In reality, that is not quite the case: as stated, automation cannot be used in every case, and if the software is subject to frequent changes, the automation engineer has to put in even more effort to keep updating the coded scripts, whereas a manual tester can simply address the changes in the test specification without being slowed down much.

Consider a script that retrieves specific data which can be moved or changed as part of normal business activity. The testing tool cannot tell that only the data has changed, so it reports a failure that then requires a manual check. To be fair, the tester is at least pointed directly to that specific failure and spared from reviewing the complete run. But what if the data changes are frequent and extensive? Is it still worth running an automated script?
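The data-dependent failure described above can be reproduced with a small, hypothetical Python sketch. The `inventory` dictionary stands in for business data in the system under test, and the batch IDs and warehouse names are invented. The point is that the software is unchanged, yet the hard-coded expectation no longer matches once the data moves, and the failure list names the exact step concerned.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical business data in the system under test: batch ID -> storage location.
inventory: Dict[str, str] = {"BATCH-001": "Warehouse A", "BATCH-002": "Warehouse B"}


def locate(batch_id: str) -> Optional[str]:
    """System function being tested: look up where a batch is stored."""
    return inventory.get(batch_id)


# Script recorded at the time BATCH-001 was stored in Warehouse A.
STEPS: List[Tuple[str, str, str]] = [
    ("BATCH-001 is in Warehouse A", "BATCH-001", "Warehouse A"),
    ("BATCH-002 is in Warehouse B", "BATCH-002", "Warehouse B"),
]


def run_steps() -> List[Tuple[str, str, Optional[str]]]:
    """Return (step, expected, actual) for every failed step."""
    failures = []
    for description, batch_id, expected in STEPS:
        actual = locate(batch_id)
        if actual != expected:
            failures.append((description, expected, actual))
    return failures


before = run_steps()
# Normal business activity moves the batch; the software itself has not changed.
inventory["BATCH-001"] = "Warehouse C"
after = run_steps()

print("Before the data change:", before)
print("After the data change:", after)
```

The script has no way of knowing whether "Warehouse C" reflects a legitimate business move or a defect, so someone still has to check it manually. If such data changes happen often, these manual checks can quickly erode the benefit of running the script automatically.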

Sometimes testing is also used to learn about a piece of software’s behaviour by exploring it. This learning aspect cannot be obtained through automated testing.

Conclusion

In conclusion, I would say that the choice between manual and automated testing depends largely on the environment. The two are not mutually exclusive and can be used in synergy. On a given system, regression testing can be automated and planned on a periodic basis, while manual testing is executed every time a new feature is implemented (before the feature is added to the automated scripts). There are also specific verifications, such as comparisons against data that changes daily, that are better executed manually. That said, the same testing effort can be split between automated and manual testing.

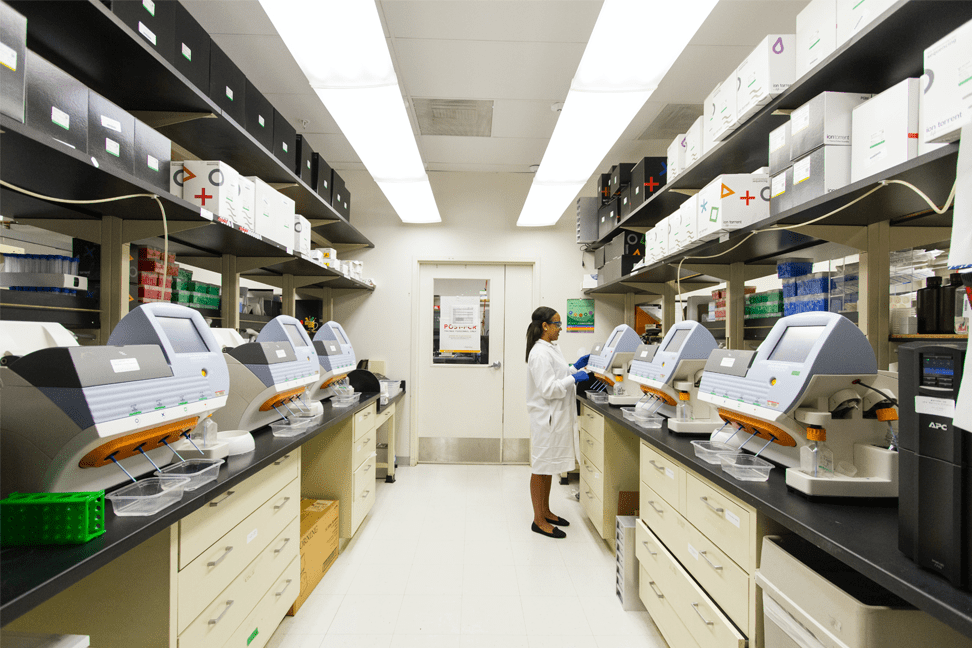

There are also testing activities where automation is not really worthwhile. For example, in highly regulated environments (such as pharma or medical devices), systems must be validated before being released for normal business use. Validation is a complex, structured process that follows a risk-based methodology and requires the QA engineer to study the platform directly in order to determine its risk criticality. The initial validation therefore needs to be done manually, as it is not worth coding scripts for a single run. Once the system is validated, automated regression testing can be considered and planned as part of periodic verification.

Finally, we have focused only on software testing, but we should also mention environments where systems are tightly interfaced with equipment and manufacturing machines. In such cases it is not always possible to separate software testing from equipment usage, and where it is not, manual testing is the only applicable testing methodology.

Are you interested in our consulting services? You can get in touch with us by writing an email to contact@kvalito.ch

Author: Marco Polisena, Life Science Consultant