Part I: Overview

Artificial Intelligence (AI) now touches everyday life in one way or another: searching online for the next holiday destination, following Netflix movie recommendations, asking Alexa whether an umbrella is needed today, or dreaming of a future self-driving car. Did you ever realise that these applications rely on AI, and do you understand how it works? Are you aware that regulators are stepping up their efforts to regulate AI and Machine Learning (ML) better?

This series of articles outlines what AI and ML are, how they are being adopted in the Life Sciences industry, which regulations apply to AI/ML, which moves regulators are most likely to make, and the challenges of adopting and integrating AI. This first article discusses fundamental concepts and the pros and cons of AI.

What are Artificial Intelligence (AI) and Machine Learning (ML)?

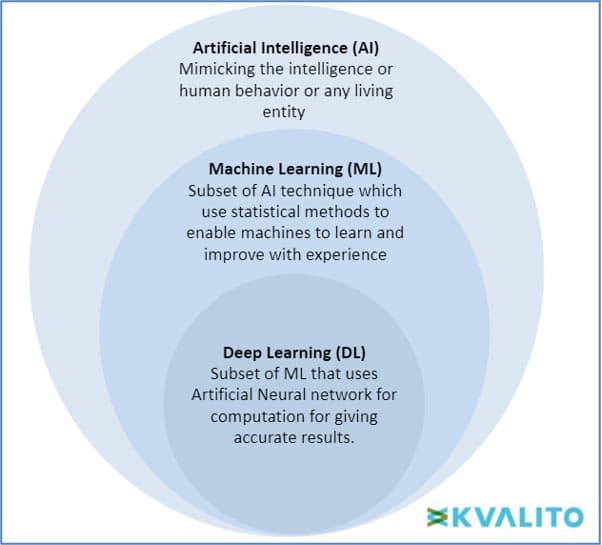

Artificial Intelligence (AI) is defined as a machine’s ability to perform cognitive functions that we associate with human minds, such as problem-solving and learning. AI can be used in robotics and autonomous vehicles.

Machine Learning (ML) can be defined as the branch of AI whose main objective is to build and understand methods that can ‘learn’: based on a given sample dataset (known as the ‘training set’), the model can make decisions or predictions about the wider population data on its own, without being explicitly programmed. There are three major types of ML: supervised learning, unsupervised learning and reinforcement learning. In supervised learning, the algorithm works on a labelled dataset and provides an answer to a problem posed by a human. In unsupervised learning, the algorithm works on unlabelled data and tries to explain the dataset by recognising features and patterns on its own. In reinforcement learning, the algorithm learns to perform a task by attempting to maximise the rewards it receives for its actions.
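To make the difference between supervised and unsupervised learning concrete, here is a minimal sketch in Python. The data values, the function names and the choice of a nearest-centroid classifier and a two-cluster k-means are all assumptions made for illustration; they are not taken from any product or study mentioned in this article, and reinforcement learning is left out for brevity.

```python
from statistics import mean

# Toy, made-up 1-D measurements (hypothetical values, not real clinical data).
labelled = [(1.0, "low"), (1.2, "low"), (0.8, "low"),
            (5.0, "high"), (5.3, "high"), (4.7, "high")]


def train_supervised(samples):
    """Supervised: learn one centroid per human-provided label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Assign the label whose centroid lies closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def cluster_unsupervised(values, iterations=10):
    """Unsupervised: 1-D k-means with two clusters; no labels are used."""
    centres = [min(values), max(values)]  # simple deterministic start
    groups = [[], []]
    for _ in range(iterations):
        groups = [[], []]
        for v in values:
            nearest = 0 if abs(v - centres[0]) <= abs(v - centres[1]) else 1
            groups[nearest].append(v)
        # Keep the old centre if a cluster happens to be empty.
        centres = [mean(g) if g else c for g, c in zip(groups, centres)]
    return centres, groups


model = train_supervised(labelled)
print(predict(model, 4.5))  # label chosen for an unseen value

centres, groups = cluster_unsupervised([v for v, _ in labelled])
print(groups)  # the two groups found without ever seeing a label
```

In the supervised case the labels "low" and "high" come from a human; in the unsupervised case the algorithm only reports that the values fall into two groups and leaves their interpretation to the user.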

Deep learning is a subset of ML that requires less data pre-processing by humans and can process a broader range of data sources; it can also often produce more accurate results than a traditional ML approach. It uses many interconnected layers of software-based calculators called neurons, which together form a neural network. The network can ingest a large amount of input data and learns increasingly complex features of the data at each successive layer.
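The layered structure described above can be sketched as a forward pass through a tiny network. This is an illustration only: the weights are hand-picked, hypothetical numbers, the sigmoid activation is one common choice among several, and the training step that would actually learn the weights from data is not shown.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """One layer: every neuron takes a weighted sum of the inputs,
    adds its bias and applies a non-linearity."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Feed the outputs of each layer into the next one."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical weights: 3 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]

output = network_forward([1.0, 2.0, 3.0], layers)
print(output)  # a single value between 0 and 1
```

Real deep learning models follow the same pattern with many more layers and neurons, and with weights learned from training data rather than typed in by hand.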

Figure 1: Hierarchy of Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL) copyright KVALITO Consulting Group 2022.

How AI plays a role in our daily lives and the mechanism behind it

General examples of AI applications are web search engines (Google), self-driving cars (Tesla), recommendation systems (Amazon, Netflix) and speech understanding (Alexa, Siri).

A more specific example, which shows the pronounced advantage AI brings to data science in the rapid analysis of large amounts of data, was recently published by BioNTech in cooperation with the London-based AI company InstaDeep. According to their article, AI allows them to spot potentially high-risk variants of the SARS-CoV-2 virus [R1]. The system combines structural modelling methods with natural language processing techniques and draws on globally available sequence data and experimental data. Assessing a variant’s ‘immune escape potential’ otherwise requires in vitro neutralisation tests involving serum from vaccinated subjects or from patients previously infected with other variants of SARS-CoV-2, together with measurements of binding affinity to the human receptor angiotensin-converting enzyme 2 (ACE2), the host receptor the virus uses to enter cells. Instead, the variant spike protein sequence data are analysed, and high-risk variants are predicted based on their Immune-Escape and ACE2-Binding scores. Both scores bear on how fast a variant can spread and how easily the virus can enter body cells (immune escape and fitness potential). Based on this analysis, vaccine production can be adapted to the high-risk variant sequence, and health authorities and policymakers can be alerted before a high-risk SARS-CoV-2 variant becomes established in society.

Potential of AI/ML in the Healthcare Industry

AI/ML is widely used in healthcare applications and medical devices to improve clinical efficiency and accuracy. The global market for AI in healthcare is expected to grow to over USD 8 billion by 2022. What makes AI tools and systems so advantageous in the dynamic healthcare industry?

- Highly useful for low-dimensional problems, i.e., settings with a limited number of potential covariates, predictors or small samples which often arise in healthcare industries.

- ML is more exploratory and less dependent on prior or historical hypotheses and assumptions.

- An effective application of AI/ML technologies can transform commercial strategies to a considerable extent, which in time can give decision-makers an edge in the market.

- Advanced AI/ML techniques ensure efficient delivery of proofs of concept and solutions as well as technical expertise.

- Health-tech data can be extensively processed and modelled by various sophisticated AI/ML algorithms.

What is Software as a Medical Device (SaMD)?

According to the International Medical Device Regulators Forum (IMDRF) [R2], Software as a Medical Device (SaMD) is defined as software intended to be used for one or more medical purposes that perform these purposes without being part of a hardware medical device. In short, it is software, or a set of software components, that can itself be treated as a medical device. The increased use of AI/ML in medical devices has driven a surge in AI/ML-based SaMD. AI techniques used in SaMD are divided into two segments: Machine Learning (ML) and Deep Learning (DL).

There are various fields of medicine where SaMD is being used extensively. Some of the applications of SaMD platforms can be categorised as:

- Screening and diagnosis

- Monitoring and alerting

- Chronic condition and disease management

- Digital therapeutics

Advantages of AI/ML and Applications

As the algorithms learn from newer and larger datasets every day, they are automatically altered and can therefore make better decisions cumulatively over time. This is where the notion of unsupervised, adaptive algorithms comes in: they update themselves without manual intervention.

Examples of AI/ML-based SaMD:

- Myocardial Infarction study: An adaptive unsupervised algorithm was trained on a dataset of approximately 3000 patients and then tested on 8000 patients with suspected myocardial infarction. Surprisingly, it predicted and diagnosed myocardial infarction better than physicians did, because the algorithm kept evolving as it learned from new data sources.

- Radiological Applications of AI: Computer-aided detection and diagnosis software (CADe & CADx) analyses radiological images to suggest clinically relevant findings and aid diagnostic decisions. Computer-aided triage (CADt) software analyses images to prioritise the review of images for patients with potentially time-sensitive findings. For example, Skin Cancer Detection is an imaging system that uses algorithms to give patients diagnostic information for skin cancer.

- Adult Congenital Heart Disease (ACHD) Detection: The clinical and demographic data of 10,019 adult patients were collected to build dedicated DL models, which help to categorise patients according to various factors such as disease complexity and diagnostic group. This study showed that deep learning models were 90% accurate in categorising and predicting the disease.

Confronting risks of AI/ML

AI has been applied widely to enhance healthcare delivery, from day-to-day operations to healthcare innovation. However, it is a double-edged sword, and the problems often arise from the data and from the algorithm or model trained on it. When AI is trained on partial data, it can amplify the bias in that data and increase its potential scale. A minor mistake or flaw in the algorithm or training dataset could affect millions of people, expose companies to class-action lawsuits, and jeopardise their reputations.

A summary of five main AI/ML-related risks:

Infancy stage of regulation. No concrete guidelines for AI/ML have been established yet, and the industry is waiting for confirmed ones. In the meantime, the industry still adheres to the traditional Computer System Validation (CSV) guidelines, which do not yet make clear how the use of SaaS and PaaS technologies such as AWS is affected.

Data difficulties. The growing volume of unstructured data from social media and the Internet of Things (IoT) has made it harder to ingest, sort, link, and use data correctly. Consequently, users are vulnerable to pitfalls such as inadvertently using or disclosing sensitive information. For instance, although a patient’s name has been redacted from one section of a medical record used by an AI system, it could still be present in the doctor’s notes section of the record. Another risk is data drift, which means that data inputs shift unexpectedly and AI performance degrades as a result. Therefore, stakeholders must be aware of and adhere to the applicable Data Privacy and Health Authority Regulations, depending on their data collection, storage and processing activities.
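The data drift risk described above can be monitored with a simple check that compares new inputs against the training data. The sketch below is one simplistic heuristic among many, not a validated or regulatory method: the function names, the threshold of 2.0 and the patient ages are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def drift_score(baseline, current):
    """Shift of the current mean away from the baseline mean,
    measured in baseline standard deviations."""
    spread = stdev(baseline)
    if spread == 0:
        raise ValueError("baseline has no variation; score is undefined")
    return abs(mean(current) - mean(baseline)) / spread


def has_drifted(baseline, current, threshold=2.0):
    """Flag a batch whose mean moved more than `threshold` deviations.
    The default threshold is an arbitrary, illustrative choice."""
    return drift_score(baseline, current) > threshold


# Hypothetical patient ages: the model was trained on people around 50.
training_ages = [52, 48, 50, 51, 49, 50, 47, 53]
similar_batch = [50, 49, 52, 48, 51]
shifted_batch = [71, 68, 74, 70, 69]  # a much older population arrives

print(has_drifted(training_ages, similar_batch))
print(has_drifted(training_ages, shifted_batch))
```

A production system would typically track many input features and use more robust statistics, but the principle is the same: compare what the model sees today with what it was trained on, and raise an alert when the two diverge.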

Technology troubles. The performance of AI systems can be negatively impacted by technology and process issues across the entire operating environment. As an illustration, one financial institution ran into trouble when its compliance software failed to detect trading issues because the data feeds no longer included all customer trades.

In-built bias. Training data is the primary source of problems, because AI learns from training data and improves its performance over time; flaws in that data can lead to in-built AI bias. AI transparency is therefore required: manufacturers should disclose the attributes of the training data they feed to the AI and explain how their AI works. In addition, the usage and storage of training data need to be tightly regulated. According to CSV guidelines, data from a higher environment such as Prod should not be moved to lower environments such as QA or retraining areas for AI/ML; this requires an amendment to the SOP. A study published in Science in 2019 revealed such in-built bias: a healthcare prediction algorithm used by insurance companies and hospitals across the US to identify high-risk patients was far less likely to select Black patients for “high-risk care management” programs [R3].
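A first, very rough screen for the kind of bias described above is to compare how often an algorithm selects members of different groups. The sketch below uses made-up decisions and hypothetical group names "A" and "B"; it is not the analysis used in the cited study, and a gap in selection rates is only a prompt for closer investigation, not proof of bias on its own.

```python
from collections import defaultdict


def selection_rates(records):
    """Fraction of each group that the algorithm flagged.
    `records` is a list of (group, was_flagged) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, flagged in records:
        totals[group] += 1
        if flagged:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def rate_ratio(rates, group, reference):
    """Selection rate of `group` relative to `reference`."""
    if rates[reference] == 0:
        raise ValueError("reference group was never selected")
    return rates[group] / rates[reference]


# Made-up decisions: 30 of 100 people flagged in group A, 12 of 100 in B.
records = ([("A", True)] * 30 + [("A", False)] * 70 +
           [("B", True)] * 12 + [("B", False)] * 88)

rates = selection_rates(records)
print(rates)
print(rate_ratio(rates, "B", "A"))  # well below 1: B is selected less often
```

Disclosing figures like these alongside the attributes of the training data is one practical way for manufacturers to support the transparency called for above.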

Security snags. Another rising risk is the possibility of fraudsters exploiting seemingly innocuous marketing, health, and financial data collected by businesses to power AI systems. These threads can be stitched to construct fraudulent identities if there is a lack of security measures. Even though the target companies (who may be good at protecting personally identifiable information) are unwitting accomplices, they may still face consumer backlash and regulatory penalties.

Conclusion

Many headlines have lately illustrated the unintended consequences of new machine-learning models. Undoubtedly, new regulations are required in this area, and these will surely increase the compliance burden on manufacturers. Still, they will provide clarity to consumers, minimise the risk of litigation for compliant organisations and enhance SaMD manufacturers’ confidence in innovating while leveraging AI in the healthcare sector to its fullest potential. The FDA and the EU are therefore making tentative moves to regulate healthcare AI, given the growing reliance on AI and, in particular, machine learning. This will be discussed in the following article, ‘Taking Shape: Artificial Intelligence Regulation and its impact on CSV/CSA (II)’.

References:

[R1] – Karim Beguir, Marcin J. Skwark, Yunguan Fu, Thomas Pierrot, Nicolas Lopez Carranza, Alexandre Laterre, Ibtissem Kadri, Bonny Gaby Lui, Bianca Sänger, Yunpeng Liu, Asaf Poran, Alexander Muik, Ugur Sahin – Early Computational Detection of Potential High Risk SARS-CoV-2 Variants

[R2] – International Medical Device Regulators Forum (IMDRF): https://www.fda.gov/medical-devices/cdrh-international-programs/international-medical-device-regulators-forum-imdrf https://www.imdrf.org/

[R3] – Ziad Obermeyer, Brian Powers, Christine Vogeli and Sendhil Mullainathan – Dissecting racial bias in an algorithm used to manage the health of populations, 2019 https://www.science.org/doi/10.1126/science.aax2342

Authors: Aritra Chackrabarti, Dr. Anna Lena Hürter, Jocelyn Chee, Karunakar Budema

