Part III: Overview

Overview of CSV for AI-based systems

Artificial Intelligence (AI) refers to the simulation of human intelligence processes by machines, especially computer systems. AI-based systems are software systems whose functionality is enabled by at least one AI component (e.g., for image and speech recognition or autonomous driving). Advances in AI are making such systems pervasive in society. With AI increasingly embedded in the fabric of business and everyday life, both corporations and consumer-advocacy groups have lobbied for more explicit rules to ensure that it is used responsibly. Failure of an AI element can lead to system failure [R1], hence the need for AI verification and validation (V&V).

This article provides an overview of how machine learning components or subsystems “fit” into the systems engineering framework in the Pharma and Life Sciences domain. It identifies the characteristics of AI subsystems that make their V&V challenging, illuminates those challenges, and outlines some potential solutions while noting open or continuing areas of research in the V&V of AI subsystems.

AI-based System Development Life Cycle: V&V activities.

AI-based systems have a different life cycle than traditional systems, and V&V activities occur throughout that entire life cycle. Validation is key to ensuring patient safety, product quality and data integrity. Efficacy is tested as well: models must be validated before initial deployment and then continuously monitored and adapted after implementation to ensure that they keep performing the intended operation.

Some definitions related to data are needed to understand the AI System Development Life Cycle flow:

- Training data: It is the dataset, usually large, that is used to teach a machine learning model how to act.

- Test data: It is typically used to verify that a given set of inputs to a given algorithm produces some expected result.

- Data Validation: Based on the accuracy of prediction or decision-making of the model on the test data set, the model is validated using specific techniques.

- Operational Data: It is the data which is produced by the daily performance of the machine learning model.

The SDLC process goes through different steps:

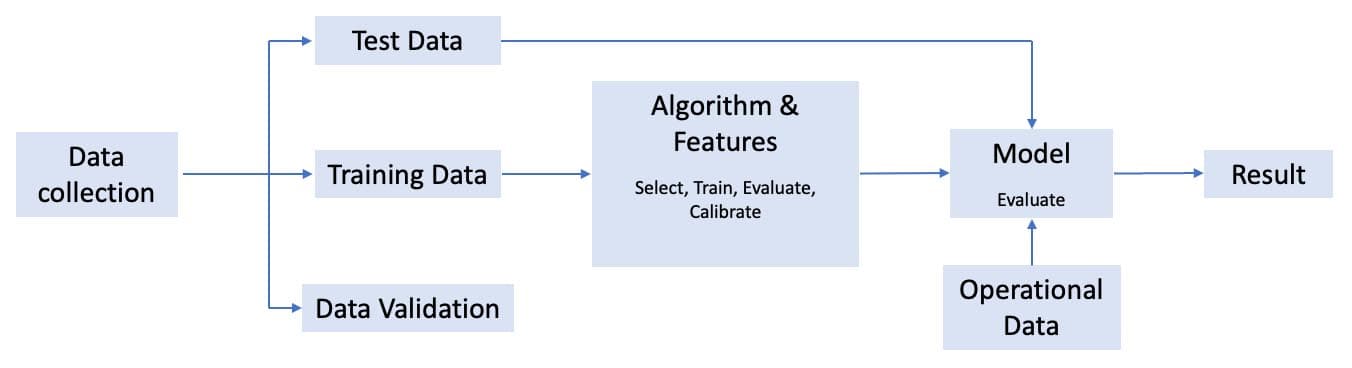

Figure 1: General AI System Development Life Cycle.

- Data Collection Stage: It is the process of gathering information from all the relevant sources to find a solution to the research problem.

- Selection Stage: In this stage, the collected data points are selected to be partitioned into a training set and testing set for the purpose of algorithm building.

- Training Stage: In this stage, the train set data points are used for the model building and from this given information, the model ‘learns’.

- Evaluation Stage: In this stage, the trained model is evaluated and validated over a pre-defined set of data points known as the test set.

- Calibration Stage: It is the stage responsible for comparing the actual output and the expected output given by a system.
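The first four stages above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a validated implementation: the synthetic one-feature data, the function names (`split_dataset`, `train_threshold`, `evaluate`) and the 80/20 split are all hypothetical choices made for the example, and the "model" is just a cut-off value.

```python
import random

def split_dataset(records, test_fraction=0.2, seed=42):
    """Selection stage: shuffle and partition records into train and test sets."""
    rng = random.Random(seed)
    shuffled = records[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

def train_threshold(train_set):
    """Training stage: 'learn' a cut-off halfway between the two class means."""
    pos = [x for x, y in train_set if y == 1]
    neg = [x for x, y in train_set if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def evaluate(threshold, test_set):
    """Evaluation stage: accuracy measured on the held-out test set only."""
    correct = sum(1 for x, y in test_set if (x > threshold) == (y == 1))
    return correct / len(test_set)

# Data collection stage (synthetic stand-in): class 0 around 2.0, class 1 around 8.0
rng = random.Random(0)
records = [(rng.gauss(2.0, 1.0), 0) for _ in range(100)]
records += [(rng.gauss(8.0, 1.0), 1) for _ in range(100)]

train_set, test_set = split_dataset(records)
threshold = train_threshold(train_set)
accuracy = evaluate(threshold, test_set)
print(f"threshold={threshold:.2f}, test accuracy={accuracy:.2%}")
```

The point of the sketch is the separation of duties: the test set never influences `train_threshold`, so the accuracy reported by `evaluate` is evidence about unseen data rather than about data the model has already learned from.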

AI Operational Cycle

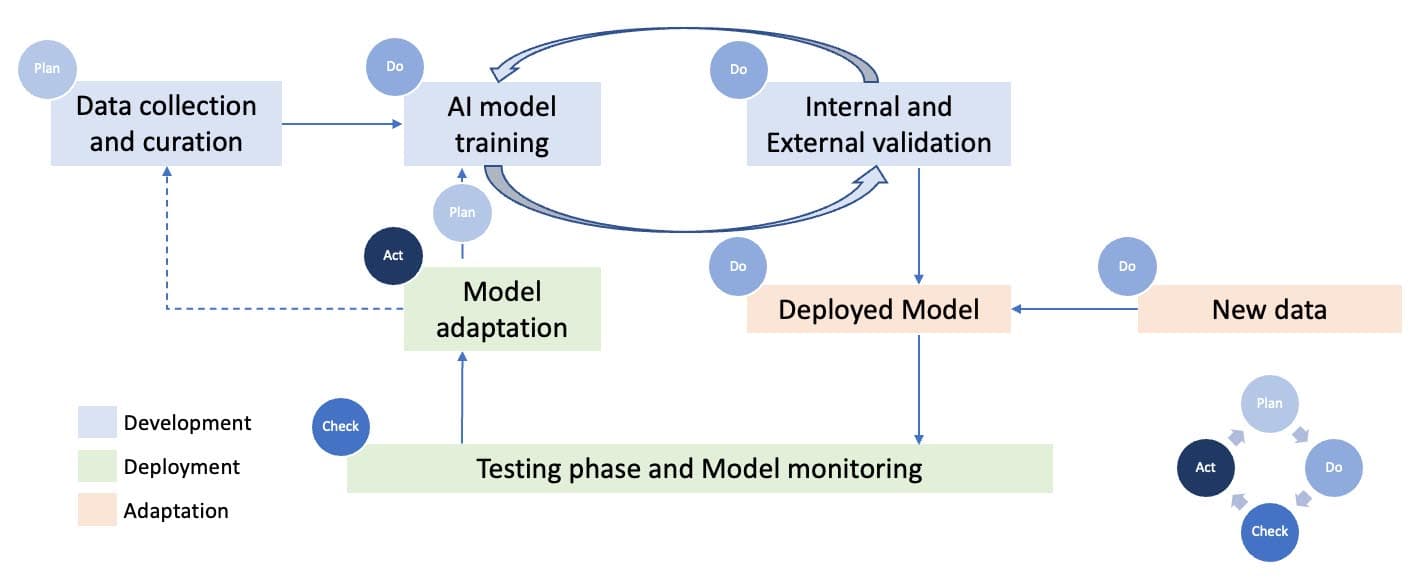

We can divide the AI Operational Life Cycle using the Deming Cycle:

- Plan Stage: At this stage, the statistician or concerned professional selects the data collection sources and techniques and decides on the features/parameters of the data which need to be studied by the fitted model.

- Do Stage: At this stage, once the required data is collected and the objective is known, the model is trained on this data using suitable AI/ML or statistical algorithms. The model then passes through several steps of internal and external validation based on a partition of the data known as test data. After this, the model can be used to predict and generate new data sets.

- Check Stage: At this stage, the functionality and the accuracy of the model predictions are constantly monitored for corrections and improvements.

- Act Stage: In the end, the model is fit to be used for the user's intended purpose, and it can be self-adapting to changes (unsupervised) if required.

Figure 2: General AI Operational Life Cycle.
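As one possible reading of the Check stage, the sketch below tracks prediction accuracy over a sliding window and raises a flag when it falls below an agreed limit. The class name `AccuracyMonitor`, the window size and the 0.8 limit are assumptions made for illustration; real acceptance criteria would come from the validation plan.

```python
from collections import deque

class AccuracyMonitor:
    """Tracks accuracy over the most recent `window` confirmed predictions."""

    def __init__(self, window=50, min_accuracy=0.9):
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop out automatically
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        """Store whether a prediction matched the later-confirmed actual value."""
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing was recorded yet."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """True only when the window is full and accuracy is below the limit."""
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.min_accuracy

# Hypothetical usage: ten correct predictions, then three wrong ones
monitor = AccuracyMonitor(window=10, min_accuracy=0.8)
for _ in range(10):
    monitor.record("pass", "pass")
flag_before = monitor.needs_review()
for _ in range(3):
    monitor.record("pass", "fail")
flag_after = monitor.needs_review()
print(flag_before, flag_after, monitor.accuracy())
```

A flag from `needs_review` would feed the Act stage, for example by triggering retraining or a documented investigation.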

CSV for AI/ML systems

Computer system validation (CSV) is the process of obtaining documented evidence, to a high degree of assurance, that a computer system performs its intended functions accurately and reliably in the Pharma and Life Sciences domain. Health Authorities across the world have regulated the environment through, e.g., the FDA’s 21 CFR Part 11 [R2] and 21 CFR Part 820.70 [R3], and the EU’s EudraLex Volume 4, Annex 11 [R4]. Guidelines such as Good Automated Manufacturing Practice (GAMP® 5, [R5]) provide the tools to comply with these regulations.

With the advent of AI/ML technologies in the Life Sciences domain, the U.S. Food and Drug Administration (FDA), Health Canada, and the United Kingdom’s Medicines and Healthcare products Regulatory Agency (MHRA) have jointly identified ten guiding principles that can inform the development of Good Machine Learning Practice (GMLP). You can check these principles in Kvalito’s document: “Taking Shape: Artificial Intelligence Regulation and its impact on CSV/CSA (II).”

CSV involves the formalised development, testing, and maintenance of GxP-applicable business computer systems such as SAP. When companies choose to use electronic systems, they must validate them according to 21 CFR Part 820.70(i) [R3].

1.- Nature of AI that leads to challenges in CSV

Ishikawa et al. (2019) [R6] conducted a questionnaire survey and identified the characteristics that elevate the difficulties of CSV of AI-ML-based systems.

- Lack of oracle: AI-ML systems aim to discover patterns hidden in millions of individual data points. Therefore, it is often impossible to know what the expected result is or to clearly pre-define the correctness criteria for system outputs.

- Imperfection and Accuracy: It is intrinsically impossible to produce adequate outputs for all possible inputs (i.e., to reach 100% accuracy).

- Behaviour Uncertainty for Untested Data: There is high uncertainty about how the system behaves in response to untested input data; for example, a slight change in the input can radically change the behaviour (adversarial examples).

- High Dependency of Behaviour on Training Data: The system behaviour is highly dependent on the training data. However, data drift might occur when the data seen in production changes. Data drift is defined as a variation in the production data from the data used to test and validate the model before deploying it in production. The change in the input data or independent variables leads to poor model performance and degrades the model accuracy over time. If data drift is not identified in time, the predictions will go wrong and negatively impact the decisions that rely on them.

- Openness of Inputs, Environments, and Requirements: It is challenging to consider every case carefully because there are enormous numbers of possible inputs to the system, target environments, and implicit user requirements.
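To make the data drift characteristic above concrete, the sketch below compares a reference sample (standing in for the data used to test and validate the model) with two production samples, using the two-sample Kolmogorov-Smirnov statistic. The choice of this statistic, the synthetic data and the `DRIFT_THRESHOLD` of 0.2 are assumptions for illustration only; a real threshold would have to be justified for the specific system.

```python
import bisect
import random

def ks_statistic(reference, production):
    """Largest gap between the empirical cumulative distributions of two samples."""
    ref = sorted(reference)
    prod = sorted(production)
    max_gap = 0.0
    # The largest gap between two step functions occurs at one of the data points
    for value in ref + prod:
        cdf_ref = bisect.bisect_right(ref, value) / len(ref)
        cdf_prod = bisect.bisect_right(prod, value) / len(prod)
        max_gap = max(max_gap, abs(cdf_ref - cdf_prod))
    return max_gap

DRIFT_THRESHOLD = 0.2  # hypothetical alert level, not a regulatory value

rng = random.Random(7)
reference = [rng.gauss(0.0, 1.0) for _ in range(500)]        # validation-time data
stable_batch = [rng.gauss(0.0, 1.0) for _ in range(500)]     # production, same process
drifted_batch = [rng.gauss(1.0, 1.0) for _ in range(500)]    # production, shifted mean

stable_score = ks_statistic(reference, stable_batch)
drifted_score = ks_statistic(reference, drifted_batch)
print(f"stable: {stable_score:.3f}, drifted: {drifted_score:.3f}")
```

Running such a check per input feature on a schedule is one way to notice drift before it shows up as degraded model accuracy.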

2.- Current Approaches and Standards of CSV

According to FDA, CSV is defined as “Confirmation by examination and provision of objective evidence that software specifications conform to user needs and intended uses, and that the particular requirements implemented through software can be consistently fulfilled.”

In other words, CSV establishes documented evidence providing a high degree of assurance that a specific computerised process or operation will consistently produce a quality result meeting its predetermined specifications. CSV is required when a new system is being configured, or some changes are being made in a pre-validated system.

A classic and popular approach to the validation of projects is the V Diagram (popularised by organisations like ISPE).

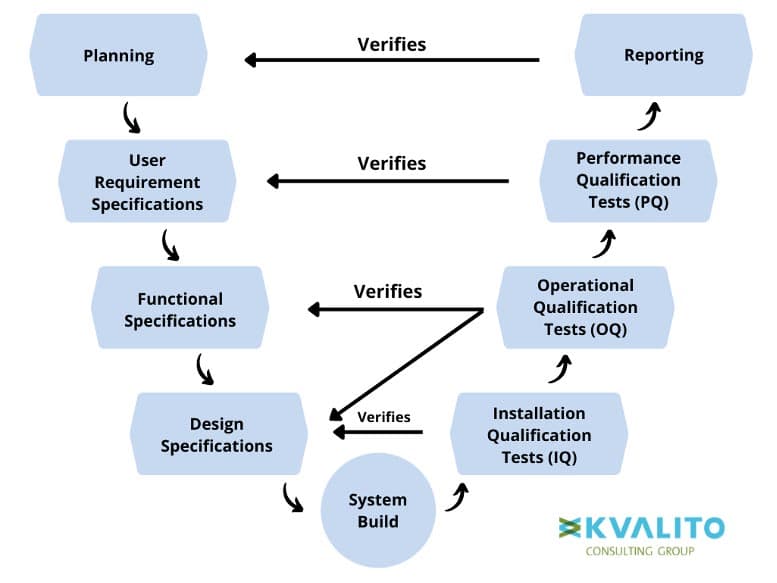

Figure 3: V-Diagram (Methodology)

According to the diagram, the process starts at the Planning phase and then moves as follows:

- User Requirement Specifications describe the user’s needs from the software and contain the different constraints such as regulations, safety requirements, etc.

- Functional Specifications describe how the software needs to work and look to meet the user’s needs.

- Design Specifications contain all the technical elements of the software or systems.

- System Build is the step where the desired software is produced or purchased and then configured on a system to previous specifications.

- Installation Qualification (IQ) tests provide confirmation (by evidence) that the software or system is installed and set up according to the Design Specification.

- Operational Qualification (OQ) tests confirm and document that all functionality defined in the Functional Specification is present and working correctly, with no defects found.

- Performance Qualification (PQ) tests confirm and document that the software effectively meets the users’ needs and is suitable for their intended use, as defined in the User Requirements Specification.

- The Cycle ends with Reporting, the last step in the validation method, where a summary report or system certification is recorded.

These steps can also be followed for an AI-based system, but in that case V&V occurs throughout the life cycle, which is the primary difference between conventional and AI-based systems.

The most important factor here is data validation: AI systems are entirely dependent on the associated dataset, so the quality of the data (accuracy, currency and timeliness, correctness, consistency, usability, security and privacy, accessibility, accountability, scalability, lack of bias, coverage of the state space) should be examined beforehand. Data validation steps can include file validation, import validation, domain validation, transformation validation, aggregation rule validation and business validation [R7].
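A few of the data validation steps named above can be sketched as simple record-level checks. The field names (`batch_id`, `ph`, `temperature_c`), the allowed ranges and the function `validate_records` are hypothetical; the sketch covers only completeness, domain and duplicate checks, not file, import, transformation or aggregation validation.

```python
def validate_records(records, required_fields, allowed_ranges):
    """Return a list of (row_index, problem) findings; empty means all checks passed."""
    findings = []
    seen = set()
    for index, record in enumerate(records):
        # Completeness: every required field must be present and non-empty
        for field in required_fields:
            if record.get(field) in (None, ""):
                findings.append((index, f"missing {field}"))
        # Domain validation: numeric fields must lie within their allowed range
        for field, (low, high) in allowed_ranges.items():
            value = record.get(field)
            if isinstance(value, (int, float)) and not low <= value <= high:
                findings.append((index, f"{field} out of range"))
        # Consistency: flag rows that exactly repeat an earlier row
        key = tuple(sorted(record.items()))
        if key in seen:
            findings.append((index, "duplicate record"))
        seen.add(key)
    return findings

# Hypothetical process data with three deliberate defects
records = [
    {"batch_id": "B001", "ph": 7.1, "temperature_c": 21.5},
    {"batch_id": "B002", "ph": 15.2, "temperature_c": 22.0},  # pH outside 0-14
    {"batch_id": "", "ph": 6.9, "temperature_c": 20.8},       # missing identifier
    {"batch_id": "B001", "ph": 7.1, "temperature_c": 21.5},   # repeats row 0
]
findings = validate_records(
    records,
    required_fields=["batch_id", "ph", "temperature_c"],
    allowed_ranges={"ph": (0.0, 14.0), "temperature_c": (15.0, 30.0)},
)
for row, problem in findings:
    print(f"row {row}: {problem}")
```

Returning findings instead of raising on the first error keeps a complete record of what was checked and what failed, which suits the documented-evidence nature of CSV.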

V&V Approaches

Some of the most used approaches for Validation and Verification are:

- Metamorphic testing to test ML algorithms, addressing the oracle problem (Xie et al., 2011). [R8]

- The ML Test Score, a rubric that includes tests for features and data as well as monitoring tests for ML systems (Breck et al., 2016). [R9]

- Checking for inconsistency in the outcome of the desired behaviour of the software and searching for worst-case outcomes when testing consistency with specifications.

- Corroborative verification [R10], in which several verification methods work at different levels of abstraction and are applied to the same AI component.
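Metamorphic testing, the first approach in the list above, can be illustrated with a toy nearest-neighbour classifier. Instead of asserting a known correct label (which the oracle problem rules out), the test asserts that predictions stay the same under input changes that should not matter. The two relations used here (reordering the training data, shifting all features by a constant) and the small dataset are illustrative assumptions, not necessarily the relations studied in [R8].

```python
import random

def predict_1nn(training, point):
    """Label of the training sample closest to `point` (squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    nearest = min(training, key=lambda sample: sq_dist(sample[0], point))
    return nearest[1]

# Integer features keep all distance arithmetic exact
training = [((1, 1), "A"), ((2, 1), "A"), ((8, 9), "B"), ((9, 8), "B"), ((5, 0), "A")]
queries = [(0, 0), (3, 2), (7, 7), (10, 10), (6, 3)]
baseline = [predict_1nn(training, q) for q in queries]

# Metamorphic relation 1: the order of the training samples must not matter
shuffled = training[:]
random.Random(1).shuffle(shuffled)
after_shuffle = [predict_1nn(shuffled, q) for q in queries]

# Metamorphic relation 2: shifting every feature by the same constant
# (in training data and queries alike) must not change any prediction
shift = 100
shifted_training = [((x + shift, y + shift), label) for (x, y), label in training]
shifted_queries = [(x + shift, y + shift) for x, y in queries]
after_shift = [predict_1nn(shifted_training, q) for q in shifted_queries]

violations = [
    q for q, b, s, t in zip(queries, baseline, after_shuffle, after_shift)
    if not (b == s == t)
]
print("violated relations for queries:", violations)
```

An empty `violations` list does not prove the classifier correct; it only shows that these particular relations hold, which is why metamorphic testing is usually combined with the other approaches listed.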

V&V Standards: Standards Development Organizations (SDOs) are working on standards in AI. Some of them are mentioned below:

- ISO, an international SDO, set up an expert group to carry out standardisation activities for AI: Subcommittee 42 (SC 42) of the joint technical committee ISO/IEC JTC 1. It has a working group on foundational standards, which provides a framework and a common vocabulary, and several other working groups on computational approaches to and characteristics of AI systems, trustworthiness, use cases, applications, and big data.

- Beyond ISO, other significant SDO efforts to develop AI system standards include the IEEE P7000 project [R11] by the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, ANSI/UL 4600 [R12] by Underwriters Laboratories, and SAE G-34 [R13].

Conclusion:

In this final article of the series, we looked at AI-based systems and discussed Computer System Validation (CSV) for them. We outlined the primary differences between AI-based and conventional systems, the challenges an AI system poses for CSV, the current approaches to verification and validation (V&V) of such systems, and how international SDOs are working towards more concrete standards and regulations for AI systems.

References:

R1 – Dreossi, T., A. Donzé, S.A. Seshia. Compositional falsification of cyber-physical systems with machine learning components. In Barrett, C., M. Davies, T. Kahsai (eds.) NFM 2017. LNCS, vol. 10227, pp. 357-372. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-57288-8_26

R2 – FDA 21CFR part 11, see Part 11, Electronic Records; Electronic Signatures – Scope and Application | FDA

R3 – CFR Part 820.70, see FDA CFR Part 820.70

R4 – EU GMP Vol. 4 Part I Chapter 4: Documentation, see EudraLex – Volume 4 (europa.eu)

R5 – GAMP® 5 Appendix D1, see GAMP 5 — Table of Contents (ispe.org)

R6 – Fuyuki Ishikawa, Nobukazu Yoshioka – How do engineers perceive difficulties in engineering of machine-learning systems? Questionnaire survey, 2019

R8 – Xiaoyuan Xie, Joshua W.K. Ho, Christian Murphy, Gail Kaiser, Baowen Xu, Tsong Yueh Chen – Testing and validating machine learning classifiers by metamorphic testing, April 2011

R9 – Eric Breck, Shanqing Cai, Eric Nielsen, Michael Salib, D. Sculley – What’s your ML test score? A rubric for ML production systems, 2016

R10 – M. Webster, D. Western, D. Araiza-Illan, C. Dixon, K. Eder, M. Fisher, A.G. Pipe – A corroborative approach to verification and validation of human–robot teams, 2020

R11 – Model Process for Addressing Ethical Concerns During System Design, IEEE Global Initiative, 2021

R12 – ANSI/UL 4600: Standard for Safety for the Evaluation of Autonomous Products, April 2020

R13 – SAE G34 Artificial Intelligence in Aviation – AIR6994 – Use Case AIR, September 2021

Authors: Aritra Chackrabarti, Dr. Anna Lena Hürter, Jocelyn Chee, Karunakar Budema


Read the other articles of the series:

Taking Shape: Artificial Intelligence Regulation and its impact on CSV/CSA (I)

Taking Shape: Artificial Intelligence Regulation and its impact on CSV/CSA (II)